Understanding Artificial Intelligence and Machine Learning: From Elephants to Transformers

How AI and ML differ and how they shape our world with transformer models.

Introduction

Have you ever wondered how a computer learns to recognize an animal? When you look at the picture below, you instantly see a family of elephants. To a computer, it’s just a grid of colored pixels with no meaning. Teaching machines to perceive the world the way we do lies at the heart of artificial intelligence (AI) and machine learning (ML). This blog unpacks these concepts, shows how ML differs from traditional programming, introduces the powerful Transformer architecture behind today’s language models, and explores real‑world AI applications.

What is Artificial Intelligence?

Artificial intelligence is the broad field of computer science focused on creating machines that mimic human intelligence (geeksforgeeks.org). The word artificial refers to something made by humans, while intelligence is the ability to learn, reason and self‑correct (geeksforgeeks.org). AI systems emulate these skills to perform tasks that normally require human intelligence, such as decision‑making or language translation (geeksforgeeks.org).

AI is not a single technology but a collection of techniques. It includes rule‑based systems, knowledge‑based reasoning, and data‑driven approaches such as machine learning. Examples of AI in daily life include speech recognition systems, personalized recommendation engines, predictive maintenance tools and self‑driving cars (geeksforgeeks.org). Virtual assistants like Siri and Alexa use natural‑language processing (NLP) to interpret your requests and respond accordingly (geeksforgeeks.org).

What is Machine Learning?

Machine learning is a subset of AI that enables computers to learn patterns from data and improve with experience (geeksforgeeks.org). Rather than having developers program every rule by hand, ML algorithms are fed data and learn to make predictions or decisions without explicit instructions. This allows systems to adapt to new information and handle complex or evolving tasks (geeksforgeeks.org).

Examples of ML include image recognition (classifying objects in photos), speech recognition, sentiment analysis and recommendation systems (geeksforgeeks.org). ML models can also flag spam emails, predict equipment failures and segment customers for targeted marketing (geeksforgeeks.org).

Traditional Programming vs Machine Learning

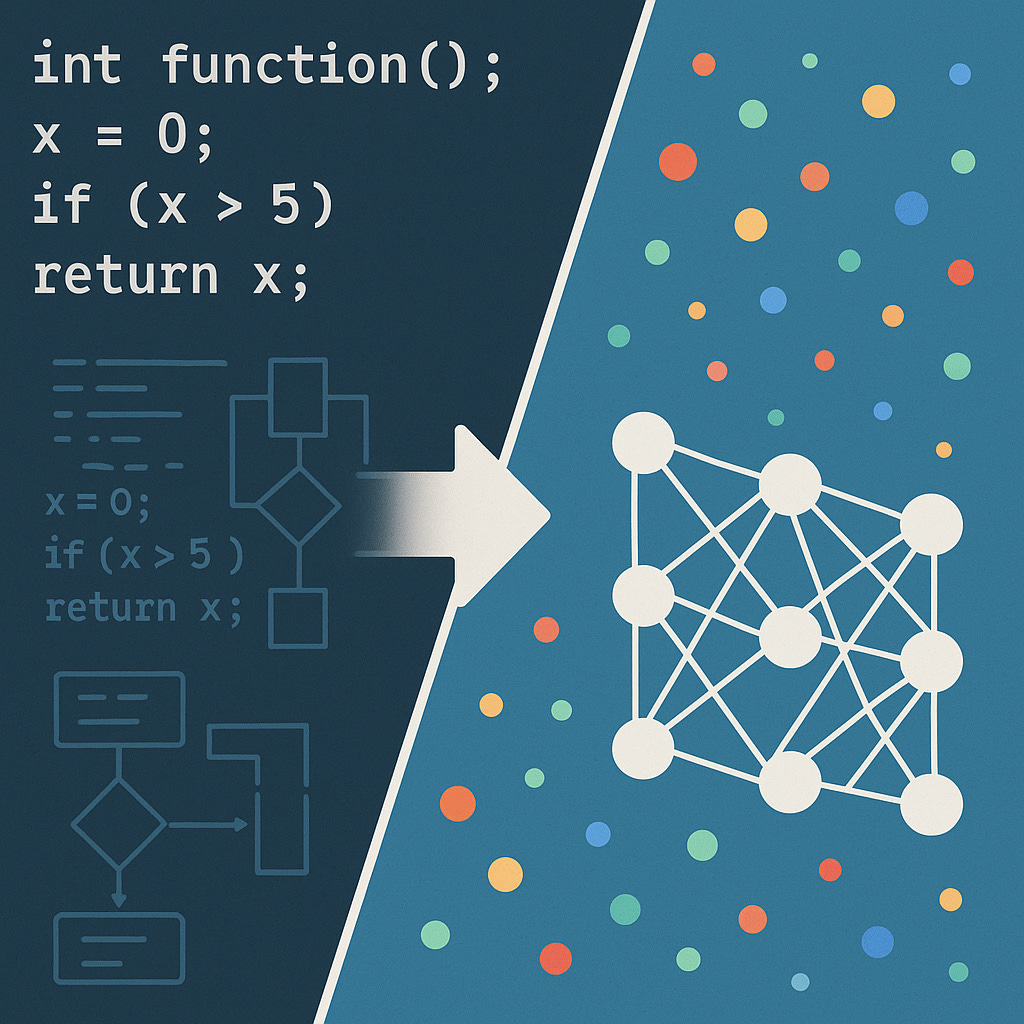

Traditional programming relies on explicitly coded rules to solve problems. Developers anticipate every possible scenario and write deterministic instructions. The same input always produces the same output (geeksforgeeks.org). Changing requirements or data formats necessitate manual code updates, making traditional software rigid (geeksforgeeks.org).

Machine learning, by contrast, is data‑driven. Algorithms learn patterns from examples instead of following pre‑written rules (geeksforgeeks.org). ML models produce probabilistic predictions, and they can adapt as new data arrives by being retrained or updated (geeksforgeeks.org). This flexibility makes ML ideal for tasks where explicit rules are hard to define, such as image recognition or language translation (geeksforgeeks.org).
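To make the contrast concrete, here is a minimal sketch in Python. The spam scenario, the link-count feature, the hand-picked threshold of 3 and the tiny training set are all hypothetical, chosen only for illustration. The first function hard-codes a rule; the second infers a comparable rule (a single threshold) from labeled examples.

```python
# Traditional programming: a human writes the rule.
def is_spam_rule(num_links: int) -> bool:
    # Hand-picked threshold (hypothetical): more than 3 links means spam.
    return num_links > 3


# Machine learning: the rule (here, one threshold) is inferred from examples.
def learn_threshold(examples: list[tuple[int, bool]]) -> float:
    """Pick the threshold that classifies the most training examples correctly."""
    values = sorted({x for x, _ in examples})
    # Candidate thresholds are midpoints between consecutive distinct values.
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def correct_count(t: float) -> int:
        return sum((x > t) == label for x, label in examples)

    return max(candidates, key=correct_count)


# Hypothetical labeled data: (number of links in the email, is it spam?)
training_data = [(0, False), (1, False), (2, False), (6, True), (7, True), (9, True)]
threshold = learn_threshold(training_data)


def is_spam_learned(num_links: int) -> bool:
    return num_links > threshold


print(threshold)           # learned from the data, not written by hand
print(is_spam_learned(5))
```

If the data changes, calling `learn_threshold` again yields a new rule with no code edits, whereas `is_spam_rule` would have to be rewritten by hand.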

The diagram below illustrates this contrast:

How Machine Learning Works

Data collection – Gather a large dataset relevant to the problem (e.g., labeled images or historical transactions).

Model training – Feed the data into an algorithm that learns the relationship between inputs and outputs.

Evaluation – Test the model on data it did not see during training to measure accuracy; if the results fall short, adjust the model's settings and retrain.

Deployment and adaptation – Use the trained model to make predictions on new data; retrain as more data becomes available.
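The four steps above can be sketched end to end in a few lines of Python. This is only an illustration under stated assumptions: the data is synthetic (two clusters of 2‑D points), the "model" is a simple nearest‑centroid classifier, and the function names `make_dataset`, `train`, `predict` and `evaluate` are made up for this example.

```python
import random


def make_dataset(n_per_class: int, seed: int = 0):
    """Step 1 (data collection): synthetic 2-D points in two labeled clusters."""
    rng = random.Random(seed)
    data = []
    for label, (cx, cy) in enumerate([(0.0, 0.0), (5.0, 5.0)]):
        for _ in range(n_per_class):
            data.append(((rng.gauss(cx, 1.0), rng.gauss(cy, 1.0)), label))
    rng.shuffle(data)
    return data


def train(examples):
    """Step 2 (training): compute the mean point (centroid) of each label."""
    sums = {}
    for (x, y), label in examples:
        sx, sy, n = sums.get(label, (0.0, 0.0, 0))
        sums[label] = (sx + x, sy + y, n + 1)
    return {label: (sx / n, sy / n) for label, (sx, sy, n) in sums.items()}


def predict(model, point):
    """Return the label whose centroid is closest to the point."""
    x, y = point
    return min(model, key=lambda lb: (x - model[lb][0]) ** 2 + (y - model[lb][1]) ** 2)


def evaluate(model, examples):
    """Step 3 (evaluation): fraction of held-out examples predicted correctly."""
    correct = sum(predict(model, p) == label for p, label in examples)
    return correct / len(examples)


data = make_dataset(n_per_class=100)
split = int(0.8 * len(data))                 # 80% for training, 20% held out
train_set, test_set = data[:split], data[split:]

model = train(train_set)
accuracy = evaluate(model, test_set)
print(f"held-out accuracy: {accuracy:.2f}")

# Step 4 (deployment): use the trained model on a new, unlabeled point.
print(predict(model, (4.5, 5.2)))
```

Retraining as more data arrives amounts to calling `train` again on the enlarged dataset.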

ML models can be supervised (trained with labeled data), unsupervised (trained on unlabeled data to find patterns) or reinforcement‑based (learning through trial and error). Deep learning, a subset of ML, uses neural networks with many layers to extract complex patterns (geeksforgeeks.org).

The Transformer Revolution

One of the most influential architectures in modern AI is the Transformer. Introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need,” the Transformer has reshaped natural‑language processing and computer vision (geeksforgeeks.org).

Why Transformers Are Needed

Earlier models like recurrent neural networks (RNNs) process text sequentially and struggle with long‑range dependencies due to the vanishing gradient problem (geeksforgeeks.org). Long Short‑Term Memory (LSTM) networks mitigate this issue but still analyze words one at a time (geeksforgeeks.org). In contrast, Transformers use self‑attention to process entire sequences in parallel, allowing them to capture context across a sentence (geeksforgeeks.org). This makes them far more effective at understanding relationships among words.

Core Concepts

The original Transformer consists of encoder and decoder layers that employ several key mechanisms:

Self‑Attention – Each word is mapped to query (Q), key (K) and value (V) vectors. A word's query is compared with the keys of all words in the sequence to determine which are most relevant. The attention scores are computed using a scaled dot product followed by a softmax function (geeksforgeeks.org). The resulting weights let the model weigh the importance of each word in the sequence when combining the value vectors.

Positional Encoding – Because Transformers process tokens in parallel, they need a way to represent the order of words. Positional encodings are added to token embeddings to provide location information within the sequence (geeksforgeeks.org).

Multi‑Head Attention – Multiple attention heads run in parallel, capturing different relationships and patterns in the data (geeksforgeeks.org).

Feed‑Forward Networks – Position‑wise feed‑forward layers refine the encoded representation at each position (geeksforgeeks.org).

Encoder‑Decoder Architecture – In tasks like translation, the encoder converts the input sequence into a latent representation and the decoder generates the output sequence, focusing on relevant parts of the input via encoder‑decoder attention (geeksforgeeks.org).
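The first two mechanisms in this list can be sketched in plain Python. This is a toy illustration, not a production implementation: it shows a single attention head, it uses the sinusoidal positional encoding from the original paper as one possible scheme, and the token vectors and identity weight matrices are hypothetical stand‑ins for embeddings and weights that a real model would learn.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def matvec(matrix, vec):
    """Multiply a (rows x cols) matrix by a vector of length cols."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    pe = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        pe.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return pe


def self_attention(embeddings, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention over token vectors."""
    queries = [matvec(w_q, x) for x in embeddings]
    keys = [matvec(w_k, x) for x in embeddings]
    values = [matvec(w_v, x) for x in embeddings]
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Compare this token's query with every key, scaled by sqrt(d_k).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


d_model = 4
# Hypothetical embeddings for a three-token sequence.
tokens = [[1.0, 0.0, 1.0, 0.0], [0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
# Add position information to each token embedding.
inputs = [[t + p for t, p in zip(tok, positional_encoding(pos, d_model))]
          for pos, tok in enumerate(tokens)]
# Identity matrices stand in for learned projection weights (an assumption).
identity = [[1.0 if i == j else 0.0 for j in range(d_model)] for i in range(d_model)]

outputs, weights = self_attention(inputs, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])  # each row sums to 1
```

Multi‑head attention would run several such heads with different projection matrices and concatenate their outputs; that step is omitted here to keep the sketch short.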

These innovations enable models like BERT, GPT and Vision Transformers (ViTs) to achieve state‑of‑the‑art performance in language understanding, text generation, image classification, and more (geeksforgeeks.org).

The illustration below captures the essence of a transformer network:

Real‑World Applications of AI and ML

AI and ML are already transforming industries. Here are a few compelling applications:

Speech recognition & voice assistants – Systems like Siri or Alexa leverage deep learning algorithms to transcribe speech and understand commands, enabling hands‑free interaction (geeksforgeeks.org). They integrate with smart homes, cars and mobile devices.

Personalized recommendations – Streaming services and e‑commerce sites use AI to analyze your browsing and viewing history to suggest products or content you’ll likely enjoy (geeksforgeeks.org).

Predictive maintenance – Manufacturers use AI to monitor sensor data and predict equipment failures before they occur, reducing downtime and costs (geeksforgeeks.org).

Medical diagnosis – AI systems analyze medical images and patient data to assist doctors in diagnosing diseases and planning treatments (geeksforgeeks.org).

Autonomous vehicles – Self‑driving cars combine computer vision, sensor fusion and deep learning to interpret their surroundings, make decisions about speed and direction, and plan safe routes (geeksforgeeks.org).

Fraud detection & risk assessment – Financial institutions apply ML algorithms to transaction data to identify unusual patterns indicative of fraud, evaluate credit risk or detect money laundering (geeksforgeeks.org).

Natural language processing – Chatbots and translation services use ML and Transformer models to understand and generate human‑like text, enabling efficient customer support and bridging language barriers (geeksforgeeks.org).
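As a small illustration of the fraud detection item above, the sketch below flags transaction amounts that sit far from the rest of an account's history. It is a deliberately simple statistical baseline, not how any particular institution does it; real systems typically rely on trained models with many features. The function name `flag_unusual`, the 2.5 standard deviation cutoff and the transaction amounts are all hypothetical.

```python
import statistics


def flag_unusual(amounts, threshold=2.5):
    """Return indices of amounts more than `threshold` standard deviations from the mean."""
    mean = statistics.mean(amounts)
    stdev = statistics.stdev(amounts)
    if stdev == 0:
        return []  # all amounts identical, nothing stands out
    return [i for i, a in enumerate(amounts) if abs(a - mean) / stdev > threshold]


# Hypothetical transaction history with one suspiciously large payment.
history = [42.0, 38.5, 51.0, 45.2, 39.9, 47.3, 44.1, 40.8, 49.6, 43.0, 980.0]
print(flag_unusual(history))  # → [10]
```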

These examples show that AI is not just theoretical; it’s a practical toolkit that enhances efficiency, accuracy and innovation across domains.

Looking Ahead: The Future of AI & ML

We are living through an AI renaissance. The Transformer architecture opened the door to large language models that can write essays and answer questions, and to related generative models that create images. Researchers continue to push the boundaries by exploring self‑supervised learning, multimodal models that combine text, images and audio, and edge AI that brings intelligence to mobile and embedded devices.

As AI systems become more capable, ethical considerations grow more important. Ensuring fairness, transparency and accountability in AI models will be crucial, especially in sensitive applications like healthcare, finance and law.

Conclusion

Artificial intelligence aims to make machines behave intelligently, while machine learning gives computers the ability to learn from data. The difference between traditional programming and ML lies in how rules are created: with ML, the computer infers patterns from examples rather than following explicit instructions (geeksforgeeks.org). The Transformer architecture’s self‑attention and parallel processing have revolutionized natural language processing and beyond (geeksforgeeks.org).

Whether it’s recognizing elephants in an image, translating languages or suggesting your next favorite song, AI and ML are transforming the way we live and work. By understanding these technologies, we can better appreciate the innovations shaping our present and future.