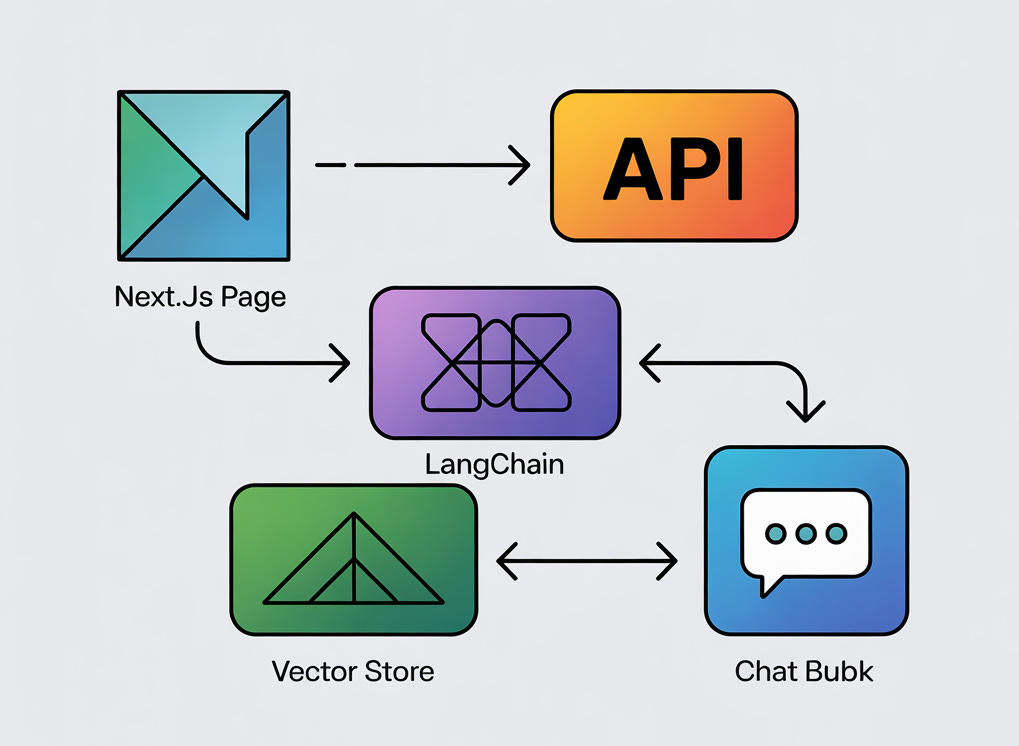

Build a Q&A Page in Next.js with LangChain.js and a Custom Vector Store

From setup to production tips: how to make your docs answer questions automatically.

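Ever wished your docs could answer questions for you? With Next.js and LangChain.js, you can build a Q&A page that uses your docs or FAQs to generate instant answers. This is retrieval-augmented generation (RAG) in action: the app retrieves the passages most relevant to a question and hands them to the model as context for its answer.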

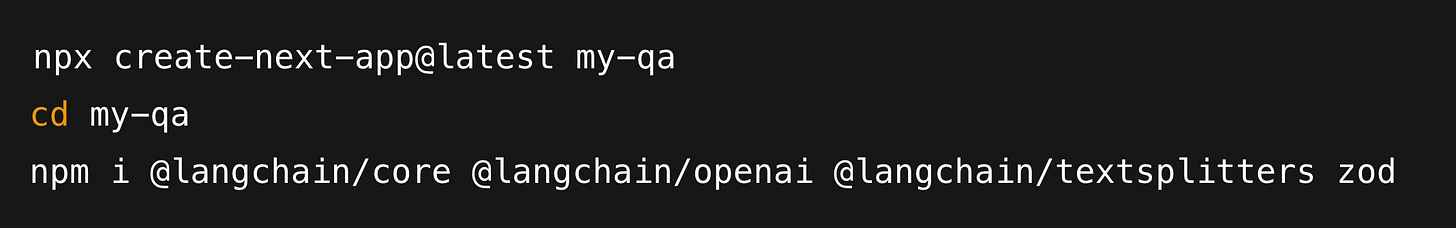

Step 1: Create a Next.js App & Install Dependencies

Add your API key in .env.local:

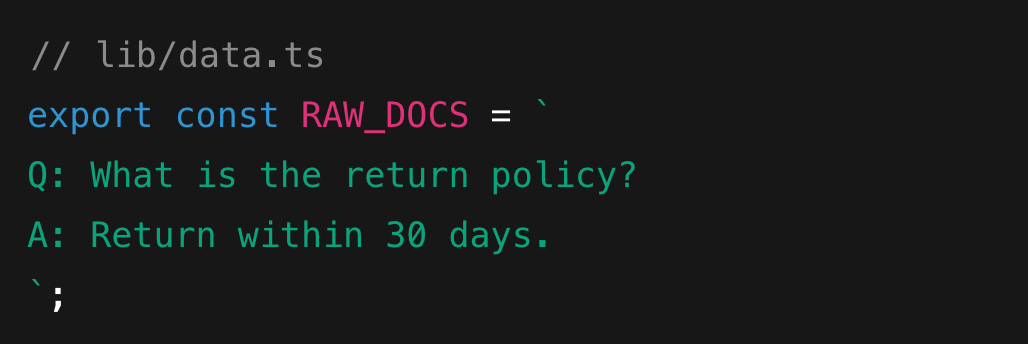
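A missing key usually surfaces as a confusing error deep inside a request, so it can help to fail fast at startup. Here is a minimal sketch of that check. The helper `requireEnv` is hypothetical, and the variable name `OPENAI_API_KEY` is an assumption based on the OpenAI embeddings used in Step 3; use whatever name your `.env.local` actually defines.

```typescript
// Hypothetical helper: fail fast when a required environment variable is missing.
// OPENAI_API_KEY is an assumed name; match it to the key in your .env.local.
type Env = Record<string, string | undefined>;

function requireEnv(env: Env, name: string): string {
  const value = env[name];
  if (value === undefined || value.trim() === "") {
    throw new Error(`Missing environment variable: ${name}. Add it to .env.local.`);
  }
  return value;
}

// In server-side code you would pass process.env:
//   const apiKey = requireEnv(process.env, "OPENAI_API_KEY");
```

Keep the key on the server. Next.js only exposes variables prefixed with `NEXT_PUBLIC_` to the browser, so an unprefixed key stays out of the client bundle.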

Step 2: Add Docs as Seed Data

💡 Replace with FAQs, README, or onboarding docs.

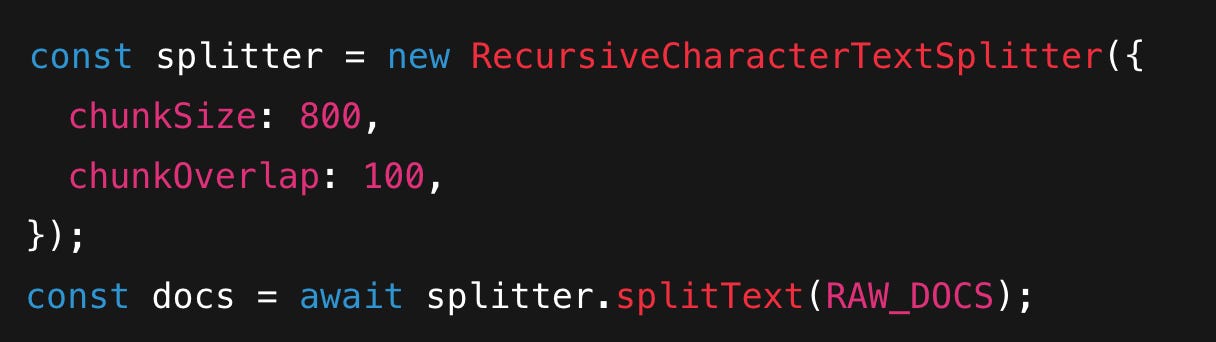
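If you want a starting point for the seed data, one possible shape is sketched below. The `SeedDoc` interface, the ids, and the sample text are all placeholders invented for illustration; swap in your real content.

```typescript
// Hypothetical seed documents; every value here is placeholder text.
interface SeedDoc {
  id: string;    // stable identifier, handy later when citing sources
  title: string; // human-readable label
  text: string;  // the content that will be split and embedded
}

const seedDocs: SeedDoc[] = [
  {
    id: "shipping",
    title: "Shipping",
    text: "We ship internationally. Orders usually leave the warehouse within two business days.",
  },
  {
    id: "returns",
    title: "Returns",
    text: "You can return unused items within 30 days of delivery for a full refund.",
  },
];
```

Giving each document a stable `id` costs nothing now and makes it much easier to show where an answer came from later.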

Step 3: Split Docs & Embed with OpenAI

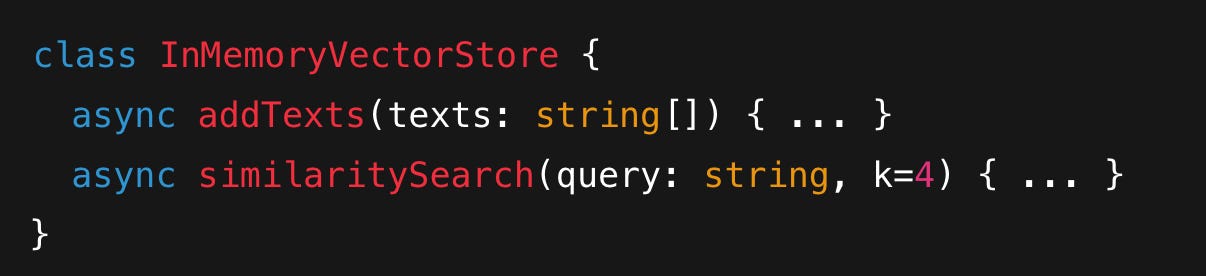
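To make the splitting step concrete, here is a dependency-free sketch of fixed-size chunking with overlap, plus an embedding step that takes the embedding call as a parameter. This is not LangChain's implementation: in the real app you would likely use LangChain.js's `RecursiveCharacterTextSplitter` and `OpenAIEmbeddings` (import paths vary by version). The names `splitText`, `embedChunks`, and `EmbedFn`, and the default sizes, are assumptions for illustration.

```typescript
// Simplified stand-in for a text splitter: fixed-size character windows with overlap.
function splitText(text: string, chunkSize = 500, overlap = 50): string[] {
  if (chunkSize <= 0) throw new Error("chunkSize must be positive");
  if (overlap < 0 || overlap >= chunkSize) {
    throw new Error("overlap must be >= 0 and smaller than chunkSize");
  }
  const chunks: string[] = [];
  const step = chunkSize - overlap; // how far each window advances
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + chunkSize));
    if (start + chunkSize >= text.length) break; // last window reached the end
  }
  return chunks;
}

interface EmbeddedChunk {
  docId: string;
  index: number;
  text: string;
  vector: number[];
}

// Hypothetical signature: wrap your embedding provider so it maps texts to vectors.
type EmbedFn = (texts: string[]) => Promise<number[][]>;

async function embedChunks(docId: string, text: string, embed: EmbedFn): Promise<EmbeddedChunk[]> {
  const pieces = splitText(text);
  const vectors = await embed(pieces);
  if (vectors.length !== pieces.length) {
    throw new Error("embed() must return one vector per input text");
  }
  return pieces.map((piece, i) => ({ docId, index: i, text: piece, vector: vectors[i] }));
}
```

The overlap means neighbouring chunks share a few characters, so a sentence that straddles a boundary still appears whole in at least one chunk.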

Step 4: Minimal Vector Store

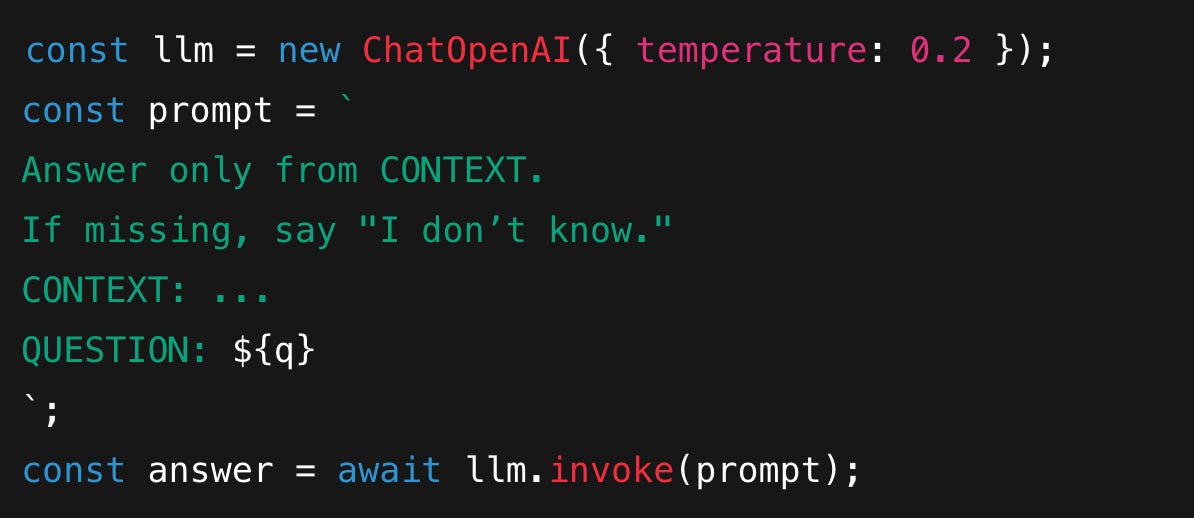
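For reference, a minimal in-memory vector store can be sketched in a few lines: keep the vectors in an array and rank them by cosine similarity at query time. The class and type names below (`SimpleVectorStore`, `VectorEntry`, `SearchHit`) are hypothetical, and a linear scan like this is only reasonable for small document sets.

```typescript
interface VectorEntry {
  id: string;
  text: string;
  vector: number[];
}

interface SearchHit {
  id: string;
  text: string;
  score: number; // cosine similarity, higher means more similar
}

function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

class SimpleVectorStore {
  private entries: VectorEntry[] = [];

  add(entries: VectorEntry[]): void {
    this.entries.push(...entries);
  }

  // Score every stored vector against the query and return the top k.
  search(queryVector: number[], k = 3): SearchHit[] {
    return this.entries
      .map((e) => ({ id: e.id, text: e.text, score: cosineSimilarity(queryVector, e.vector) }))
      .sort((x, y) => y.score - x.score)
      .slice(0, k);
  }
}
```

Usage is two calls: `store.add(entries)` after embedding your chunks, then `store.search(queryVector, 3)` with the embedded question.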

Step 5: Build a Retrieval-QA Chain

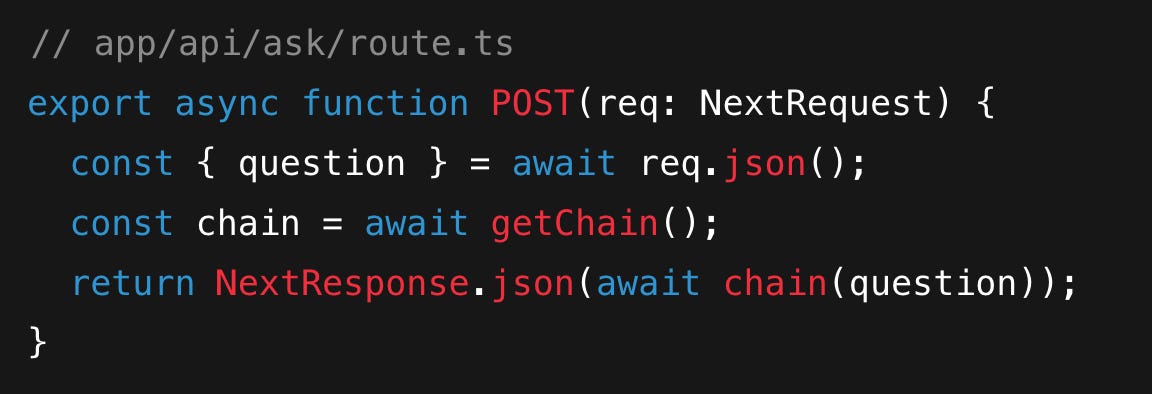
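Under the hood, a retrieval-QA chain does three things: fetch the relevant chunks, stuff them into a prompt, and ask the model. The sketch below shows that flow without LangChain so the moving parts are visible. `Retriever`, `Llm`, `buildPrompt`, and `answerQuestion` are hypothetical names, and the prompt wording is just one reasonable choice.

```typescript
// Hypothetical signatures: plug in your vector store lookup and your model call.
type Retriever = (question: string, k: number) => Promise<string[]>;
type Llm = (prompt: string) => Promise<string>;

// Build a prompt that restricts the model to the retrieved context.
function buildPrompt(question: string, contextChunks: string[]): string {
  const context = contextChunks.map((chunk, i) => `[${i + 1}] ${chunk}`).join("\n\n");
  return [
    "Answer the question using only the context below.",
    'If the context does not contain the answer, reply exactly: "I don\'t know."',
    "",
    "Context:",
    context,
    "",
    `Question: ${question}`,
    "Answer:",
  ].join("\n");
}

async function answerQuestion(
  question: string,
  retrieve: Retriever,
  llm: Llm,
  k = 3
): Promise<string> {
  const chunks = await retrieve(question, k);
  // Nothing retrieved means nothing to ground an answer in, so skip the model call.
  if (chunks.length === 0) return "I don't know.";
  const reply = await llm(buildPrompt(question, chunks));
  return reply.trim();
}
```

Telling the model explicitly what to say when the context has no answer is what makes the "I don't know" behaviour in Step 8 possible.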

Step 6: API Route /api/ask

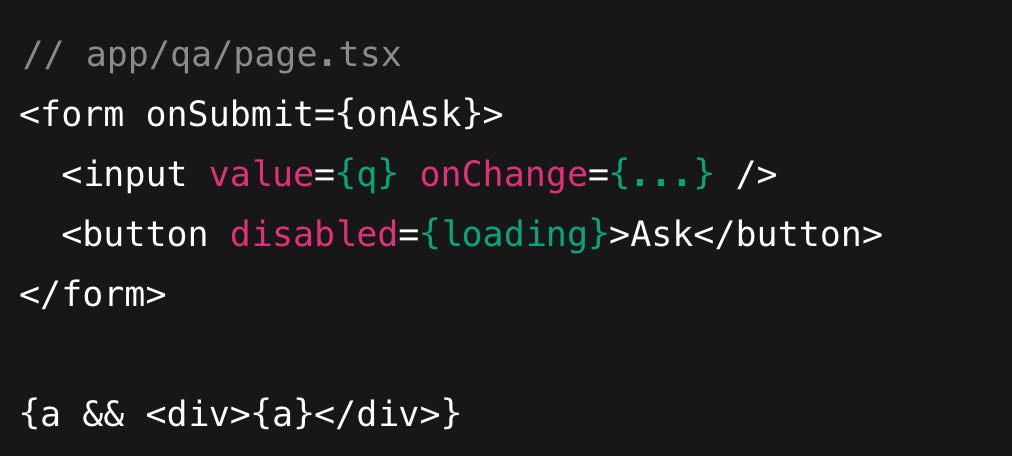
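Whatever the route looks like, it is worth validating the request body before calling the chain. Below is a sketch of that logic as a plain function, kept free of framework imports so it is easy to test. `handleAsk`, `AskResult`, and `AnswerFn` are hypothetical names, and the commented wiring assumes an App Router route handler; adapt it if your project uses the Pages Router.

```typescript
interface AskResult {
  status: number;
  body: { answer?: string; error?: string };
}

// Hypothetical signature: your chain from Step 5, reduced to question in, answer out.
type AnswerFn = (question: string) => Promise<string>;

async function handleAsk(payload: unknown, answer: AnswerFn): Promise<AskResult> {
  const question =
    typeof payload === "object" && payload !== null
      ? (payload as { question?: unknown }).question
      : undefined;

  if (typeof question !== "string" || question.trim() === "") {
    return { status: 400, body: { error: "Request body must include a non-empty 'question' string." } };
  }

  try {
    return { status: 200, body: { answer: await answer(question.trim()) } };
  } catch (e) {
    // Do not leak internal error details to the client.
    return { status: 500, body: { error: "Failed to generate an answer." } };
  }
}

// Possible wiring in app/api/ask/route.ts (assumes the App Router):
//   export async function POST(req: Request) {
//     const payload = await req.json().catch(() => null);
//     const result = await handleAsk(payload, answer);
//     return Response.json(result.body, { status: result.status });
//   }
```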

Step 7: Client Q&A Page
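On the client, the page needs little more than a text input, a submit handler, and a call to `/api/ask`. Here is a sketch of that call as a standalone function. `askQuestion` and `FetchLike` are hypothetical names, the `{ answer, error }` response shape is an assumption about what your route returns, and `fetch` is passed in as a parameter so the function can be tested without a browser.

```typescript
interface AskResponse {
  answer?: string;
  error?: string;
}

// Minimal slice of the fetch API that this helper relies on.
interface MinimalResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<MinimalResponse>;

async function askQuestion(question: string, fetchFn: FetchLike): Promise<string> {
  const res = await fetchFn("/api/ask", {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ question }),
  });
  const data = (await res.json()) as AskResponse;
  if (!res.ok) {
    throw new Error(data.error ?? `Request failed with status ${res.status}`);
  }
  return data.answer ?? "";
}

// In a client component's submit handler you would pass the global fetch:
//   const answer = await askQuestion(input, fetch);
```

From there, store the returned string in component state and render it below the input, along with a loading flag while the request is in flight.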

Step 8: Test Your App

✅ Ask a question your docs cover → you should get the correct answer

❌ Ask an unrelated question → the app should reply “I don’t know” instead of guessing

Step 9: Gotchas to Watch Out For

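Mixing embedding providers breaks results: vectors from different models are not comparable, so index and query with the same embedding model.

Chunks that are too small lose the surrounding context the model needs to answer.

A high temperature makes hallucinations more likely; keep it low for factual Q&A.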
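One way to catch the first gotcha early is to tag every stored vector with the model that produced it and check that tag at query time. The sketch below does that and also collects the chunking and temperature choices in one place. All names (`TaggedVector`, `assertCompatible`, `ragSettings`) and the specific numbers are assumptions to tune for your own docs, not recommended values from any library.

```typescript
interface TaggedVector {
  model: string;    // name of the embedding model that produced the vector
  vector: number[];
}

// Refuse to compare vectors that came from different embedding models.
function assertCompatible(stored: TaggedVector, query: TaggedVector): void {
  if (stored.model !== query.model) {
    throw new Error(
      `Embedding model mismatch: index uses "${stored.model}", query uses "${query.model}". Re-embed your docs.`
    );
  }
  if (stored.vector.length !== query.vector.length) {
    throw new Error("Embedding dimension mismatch between index and query.");
  }
}

// Assumed starting values; adjust them after testing against real questions.
const ragSettings = {
  chunkSize: 500,   // characters per chunk; much smaller tends to strip away context
  chunkOverlap: 50, // characters shared between neighbouring chunks
  temperature: 0,   // low temperature keeps answers close to the retrieved text
};
```

If you change embedding models, treat it as a re-indexing event: every stored vector needs to be regenerated with the new model.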

Step 10: Production Upgrades

Persist Vectors

Stream Answers

Highlight Sources
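Two of these upgrades, persisting vectors and highlighting sources, can be sketched without committing to a particular database. The example below serializes embedded chunks to JSON, which you could write to a file or a database column, and attaches a de-duplicated source list to an answer. `StoredChunk`, `serializeChunks`, `deserializeChunks`, and `withSources` are hypothetical names. Streaming is left out here because it depends on the model client you use.

```typescript
interface StoredChunk {
  id: string;
  source: string;   // e.g. the title or file the chunk came from
  text: string;
  vector: number[];
}

// Persist vectors: JSON is enough for small sets and avoids re-embedding on every restart.
function serializeChunks(chunks: StoredChunk[]): string {
  return JSON.stringify(chunks);
}

function deserializeChunks(json: string): StoredChunk[] {
  const parsed: unknown = JSON.parse(json);
  if (!Array.isArray(parsed)) throw new Error("Expected an array of stored chunks");
  return parsed as StoredChunk[];
}

interface AnswerWithSources {
  answer: string;
  sources: string[]; // unique sources, in the order they were retrieved
}

// Highlight sources: return which documents the answer was grounded in.
function withSources(answer: string, usedChunks: StoredChunk[]): AnswerWithSources {
  const sources = Array.from(new Set(usedChunks.map((chunk) => chunk.source)));
  return { answer, sources };
}
```

Returning `sources` alongside `answer` lets the client render them as links or badges, which also helps users judge whether to trust the answer.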

Real-World Use Case

Upload your product FAQs or docs.

Customers ask: “Do you ship internationally?” → answered instantly.

Reduces support load, improves customer experience.

Conclusion

You’ve built a Next.js Q&A app with LangChain.js that answers questions based on your docs. With a few production upgrades (database persistence, streaming, source highlighting), it can go from a weekend project to a production-ready feature.